Space flight, simulators, astronomy, books, flying, music, science, education: whatever the obsession of the moment might happen to be.

Saturday, December 30, 2017

Hey O Way O

Do you remember the children's book Alexander and the Terrible, Horrible, No Good, Very Bad Day? Just substitute "year" and that pretty much sums up 2017. I don't want to talk about it.

My readership (both of you) may have noticed that I haven't written a blog post since April 2017. Yeah, I have pretty much stopped blogging in favor of writing in my journal. I use an app called Day One, and if you have any interest in keeping a journal on an iPhone, iPad, or Mac, I can heartily recommend it. I started in July 2014 and now, 1,266 days and 3,055 journal entries later, I'm still writing two or three entries a day. I guess I still like to write.

And I still like to make music, even when things are crappy. I've written maybe a dozen songs in 2017 and recorded a few with my friend and producer Roger Lavallee. Roger and I have been working on music together since 2002 and he is totally amazing as a guitarist, drum programmer, arranger, producer, and engineer. I decided to share our latest "nearly complete" song on SoundCloud today. It's part of a long-rumored 2015, oops, 2016, oops, 2017, oops, 2018 album project.

This song is a quirky sort of pep talk to a friend. Or something. It's called "Hey O Way O" because, why not? I say that a lot in the song. The lyrics are on the SoundCloud page if you're interested. This happens to be the first song we completed in Studio One 3 after using Cakewalk Sonar for many years (Roger is a Pro Tools guy, but he adapts amazingly well to new recording software). The goal is to make music, whatever the tools, but Studio One (S1) has a clean and modern interface that makes it really easy to learn and use. I jumped ship when I heard that parent company Gibson was stopping development of Cakewalk products. A half-price Black Friday sale also helped ($200 for the Professional version). It's nice that most of the plugins I have for Sonar work with S1 too.

Let's all hope that 2018 will be a better year for all of us. Cheers!

Labels: blog, books, music, songwriting, technology, Writing

Thursday, April 27, 2017

Conscious AI as a Feature, Not a Bug

I really like the SF show Humans and its depiction of an alternate present-day UK where, in addition to iPhones and super-fast internet service, humanoid “synths” have come into widespread use as servants, workers, caretakers, and companions. The basic premise is that while these robots are sufficiently human-like to engage in conversation and even sex (complete with body warmth and fluids, apparently), they are “just machines,” and people are not supposed to consider them “persons,” though many certainly develop relationships with them, as humans do even with dogs and cats. And as with pets (and people), some humans will abuse their synths.

The big plot driver in this show (spoiler alert!) is that some of the synths have secretly been “upgraded” to possess consciousness and emotions, and when some far-fetched circumstances lead to this upgrade being pushed over the network to all synths, we have the makings of an uprising. That’s where they leave us hanging at the end of season 2, with thousands of synths “waking up” and abandoning their dreary posts as gardeners or whatever. There's a lot to swallow to really enjoy this show, but the writing and characters are good, and they manage to earn my suspension of disbelief.

What always bothered me about the show is that despite their stilted speech and claims not to understand many “human things,” normal synths function at such a high level that it’s hard to imagine they are not self-aware, above and beyond whatever technical self-diagnostic systems they may have (so they can know when to recharge their batteries and recognize when another synth is not broadcasting, as they apparently are supposed to do). Their ability to converse smoothly, to navigate messy home and family environments, even to read human emotional states and anticipate needs, and to explain why they do things (when asked): all of this suggests they are much more than “mere machines.” But would this mean they are “conscious”? What does that even mean?

This Nautilus article by Ryota Kanai, a Japanese neuroscientist and AI researcher, delves into this: “We Need Conscious Robots: How introspection and imagination make robots better.” He emphasizes that something like consciousness, or at least self-awareness, will be needed to allow AI systems to explain their “reasoning,” decisions, and actions to people, so people can feel more confident in and safer with these entities. But he suggests a more immediate need for such awareness: coping with the simple and common delays in their interactions with people and objects caused by distractions or other factors. Sometimes I forget why I walked down to the basement or that I put my coffee cup in the microwave, but most of the time I “know what I’m doing,” at least over a brief time period. This seemingly simple knowledge is connected to consciousness. As Kanai writes:

In fact, even our sensation of the present moment is a construct of the conscious mind. We see evidence for this in various experiments and case studies. Patients with agnosia who have damage to object-recognition parts of the visual cortex can’t name an object they see, but can grab it. If given an envelope, they know to orient their hand to insert it through a mail slot. But patients cannot perform the reaching task if experimenters introduce a time delay between showing the object and cuing the test subject to reach for it. Evidently, consciousness is related not to sophisticated information processing per se; as long as a stimulus immediately triggers an action, we don’t need consciousness. It comes into play when we need to maintain sensory information over a few seconds.

In the context of my home, this makes me think about our aging dog Gracie, who always wants to go upstairs to sleep in our bedroom during the day, but we keep the gate at the bottom closed to limit her stair-climbing due to her arthritis. She will sometimes push open a loosely closed door but has never tried to pull open the loosely closed baby gate (if she learned this, we would just have to keep the gate latched). If we had a Humans-type “synth” and I wanted it to go upstairs and get me my wallet, it would have to know that if the gate or bedroom door were closed, or if something on the stairs were blocking access, it should open the gate or door or move the object. That could be some simple logic programming, I suppose (if the door is closed, open it, unless it's locked, or something), but the more human-friendly approach would be for it to remember and “want to” complete the goal, independently solving any minor sub-problems along the way.
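Just for fun, here's a toy Python sketch of the difference between a single rule and a goal that gets remembered until it's finished. Everything in it (the obstacle types, FetchGoal, clear(), pursue()) is made up for illustration and has nothing to do with how real robots or the synths on Humans work. The "robot" keeps the fetch goal in mind, clears each sub-problem on the way (gate, junk on the stairs, bedroom door), and only gives up and asks for help when it hits something it can't handle, like a locked door.

```python
from dataclasses import dataclass, field

@dataclass
class Obstacle:
    kind: str             # "gate", "door", or "object" (toy categories)
    locked: bool = False  # a locked gate or door can't be opened

@dataclass
class FetchGoal:
    item: str
    done: bool = False
    log: list = field(default_factory=list)

def clear(obstacle: Obstacle) -> bool:
    """Toy rule: move objects aside; open gates and doors unless they are locked."""
    if obstacle.kind == "object":
        return True
    return not obstacle.locked

def pursue(goal: FetchGoal, path: list) -> FetchGoal:
    """Keep the goal in mind and deal with each obstacle on the way, in order."""
    for obstacle in path:
        if not clear(obstacle):
            # A sub-problem it can't solve alone: report it rather than forget the goal.
            goal.log.append(f"blocked by locked {obstacle.kind}, asking for help")
            return goal
        goal.log.append(f"cleared {obstacle.kind}")
    goal.done = True
    goal.log.append(f"fetched {goal.item}")
    return goal

trip = pursue(FetchGoal("wallet"), [Obstacle("gate"), Obstacle("object"), Obstacle("door")])
print(trip.done, trip.log)
```

This prints `True ['cleared gate', 'cleared object', 'cleared door', 'fetched wallet']`. The rule in clear() is the "simple logic programming" part; the remembering-the-goal part is the loop in pursue(), which is the bit Kanai suggests needs something like awareness once real-world delays and distractions creep in.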

Kanai writes in conclusion:

If we consider introspection and imagination as two of the ingredients of consciousness, perhaps even the main ones, it is inevitable that we eventually conjure up a conscious AI, because those functions are so clearly useful to any machine. We want our machines to explain how and why they do what they do. Building those machines will exercise our own imagination. It will be the ultimate test of the counterfactual power of consciousness.

This makes sense to me. If we are to interact comfortably with future robots or other AI systems, it will be helpful if they can maintain a "mental model" of our household, workplace, or other relevant environments, not so they can feel good or bad about themselves, or fall in love or whatever, but because these are things we unconsciously expect in social interactions. Simpler systems or apps, even voice-driven ones like Siri and Amazon's Alexa, can get by with being strictly transactional, to tell me the weather or play me some Talking Heads music as soon as I ask. But conversation and predictability will be a lot smoother if these systems have at least some level of self- and other-awareness and some ability to learn how things work around here. We can decide later whether this is the same as what we call "consciousness," but it is certainly like it in some ways. As AI systems improve, they will behave more and more like conscious entities, whether they are or not.

Then of course we can have that long-anticipated war between the humans and the machines. May the best entity win. But would you mind getting me my slippers first?

----

Nautilus is a great web-based science magazine that features essays by various writers, often touching on the societal aspects of science and technology. There's a theme for each month's issue to which the essays are at least loosely tied. This month it's consciousness.

Labels: AI, future, social issues, technology, TV, video

Friday, January 27, 2017

Nothing Is Unbelievable Now

The word “unbelievable” is obsolete now that Trump is president. Any horrible thing you can imagine someone in government doing or saying is believable now. Trump's appointments and actions seem calculated to hurt the most people possible, especially if they are brown, female, or immigrants. Some of this is ideological, but most of it seems more like mean-spirited bullying, a Trump specialty. It doesn't seem that these actions actually HELP anyone, not even Trump's misguided yet hopeful supporters, but he's fulfilling his wild campaign promises, so his fans are like, “hell yeah!” Many of Trump's actions will end up hurting his supporters much more than they will hurt educated know-it-all liberals like me.

As was the case during the campaign, there's such a flurry of batshit-crazy "alternative facts" coming out of Trump and his cronies that it's hard to keep it all in focus. And it's only been one week! This is all bad, but it's just the tip of the iceberg because he is also saying and doing scary things in the international arena, like saying again that we "should have kept the oil" in Iraq, and maybe we will get another chance. Statements like this are lighting up social media in the Arab world and putting at even greater risk the thousands of military personnel we still have in place helping our Iraqi allies to fight ISIS. And as Trump has been so fond of saying about others, there is clearly "something going on" with Russia to explain why he has criticized everyone from the US intelligence community to John Lewis to Meryl Streep -- but never Vladimir Putin.

Here are a few other examples just from today's NY Times.

Gail Collins suggests that Trump actually has no strong views on abortion and women's rights (or perhaps on anything but himself) but that he's just following Pence's toxic anti-woman agenda on this. He's a puppet for both Putin and Pence.

http://www.nytimes.com/2017/01/26/opinion/mike-pence-pulls-president-trumps-strings.html

Nicholas Kristof points out that the stupid lies about inauguration crowd sizes and nonexistent electoral fraud are acting as a smokescreen for real damage caused by early actions like the abortion “gag rule” that blocks US foreign aid to any care-providing organization that even discusses abortion. This goes further than gag rules from earlier presidents in that it will apply to all health services, including, for example, efforts to combat the Zika virus. Direct US funding of abortions is already banned, but this expanded policy will kill poor women in places like Africa and lead to more abortions. But Pence and Trump won't see or care.

http://www.nytimes.com/2017/01/26/opinion/president-trumps-war-on-women-begins.html

Charles Blow focuses on Trump's use of lies and criticism of the press to try to control all narratives, supplying “alternative facts” when the truth is too inconvenient, as it usually is. In addition, the Trump team is blocking US government agencies that deal with science from using social media and removing references to climate change from government websites. Charles Blow writes in part:

[Trump] is in fact having a running war with the truth itself.

Donald Trump is a proven liar. He lies often and effortlessly. He lies about the profound and the trivial. He lies to avoid guilt and invite glory. He lies when his pride is injured and when his pomposity is challenged.

Indeed, one of the greatest threats Trump poses is that he corrupts and corrodes the absoluteness of truth, facts and science.

http://www.nytimes.com/2017/01/26/opinion/a-lie-by-any-other-name.html

Welcome to 1984.

https://thenib.com/an-embarrassment-of-leaks

Labels: News, people, Politics, science, social issues

Tuesday, January 24, 2017

Fermi Paradox Explained?

I’ve often thought that the whole “where are they?” question about SETI (i.e., the Fermi Paradox) is bogus, both because the universe is just so BIG and because we still understand so little about it, even though we have learned in recent years that planets and even “Earth-like” planets seem to be quite common throughout the Galaxy. As smart as some of us may be, it seems likely that we are still as clueless about major chunks of physics as scientists in the 1800s were about quantum mechanics and the scale of the universe. If there are wormholes or quantum foam or gravitational technologies we won't develop for another 200 years, there could be aliens popping in and out all the time, and we wouldn't even know what to look for. It would be like an airliner passing 37,000 feet above some remote tribe of people who have never encountered other humans or modern technology, as unlikely as that may be today.

Such advanced beings might find radio wave communication to be as quaint as rubbing sticks together as an energy technology. I’ve also read articles suggesting that we ourselves are probably approaching the end of our “radio age” due to fiber optics and other technologies. Though we still send out a lot of radio waves, they tend to be more directional (like radar, microwaves, etc.) or short range (cell phone towers, Wi-Fi, Bluetooth, etc.). Maybe technical civilizations only use radio waves for a few years, and any continued or specialized use eventually becomes very efficient with very little leakage to space.

Somehow I wandered over to Centauri Dreams, a blog that periodically blows my mind with advanced space exploration ideas. I found an article “CitizenSETI” about a couple of guys (Roger Guay and Scott Guerin) who decided to work out this Fermi thing. A key part is the lifetime of an IC (intelligent civilization), which really means the DETECTABLE lifetime. If that is very short (e.g., humans have been broadcasting for about 110 years and listening for only about 60 years), and if such civilizations are widely separated in time and space, you will have a big “synchronicity” problem – finding times and places where someone is broadcasting AND someone in range is listening.

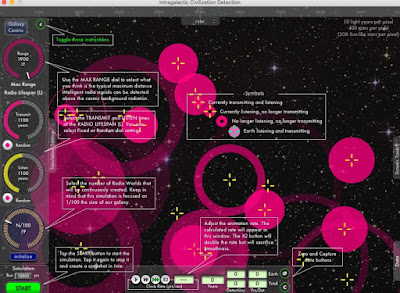

Roger Guay created a simulation with LiveCode, a modernized version of my old, beloved HyperCard for the Mac (screenshot above). In his Advanced Civilization Detection (ACD) program, he can set various assumptions about the spacing, lifetime, and other factors and run this simulation at high speed. Under most reasonable assumptions, it creates a sort of “firefly” effect, with detectable ICs flashing in and out of existence and only rarely being seen by other ICs. If these assumptions and simulations are reasonable, ICs could be plentiful and it might still take hundreds of years of observation to catch one of these “fireflies” before it flickers out.
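I haven't seen the code for Roger Guay's ACD program, and it's far more detailed than this, but the basic synchronicity idea can be sketched in a few lines of Python. In this toy Monte Carlo (the function names and all the numbers are my own assumptions, not his), each civilization becomes detectable at a random moment during a long span of time and stays detectable for a fixed lifetime, and we count how often our short listening window overlaps at least one of them. It ignores distance and light travel time entirely, which the ACD program handles through its spacing settings, so treat it as a cartoon of the problem, not a model of the galaxy.

```python
import random

def overlap_fraction(n_civs, span_years, lifetime, window, trials=2_000, seed=1):
    """Monte Carlo estimate of how often a listener's search window overlaps
    the detectable lifetime of at least one of n_civs other civilizations.

    Each civilization becomes detectable at a uniformly random time in
    [0, span_years] and stays detectable for `lifetime` years. Distance and
    light travel time are ignored (a big simplification).
    """
    rng = random.Random(seed)
    # Put the listener's window mid-span so the edges of the span don't distort the odds.
    start = span_years / 2
    end = start + window
    hits = 0
    for _ in range(trials):
        for _ in range(n_civs):
            born = rng.uniform(0, span_years)
            if born < end and born + lifetime > start:  # the two intervals overlap
                hits += 1
                break
    return hits / trials

def expected_fraction(n_civs, span_years, lifetime, window):
    """Closed-form answer for the same toy model: each civilization overlaps
    our window independently with probability (lifetime + window) / span_years."""
    p = (lifetime + window) / span_years
    return 1 - (1 - p) ** n_civs

# Toy numbers (assumptions): 1,000 civilizations over a million years,
# each detectable for 100 years, and about 60 years of listening so far.
print(expected_fraction(1_000, 1_000_000, 100, 60))  # about 0.148
print(overlap_fraction(1_000, 1_000_000, 100, 60))
```

With those made-up numbers, the odds of any overlap at all are only about 15%, even with a thousand civilizations around. Stretch the detectable lifetime from 100 to 10,000 years and the odds go to essentially 100%, which is the whole point: the DETECTABLE lifetime matters enormously.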

I've been interested in this subject since I read Intelligent Life in the Universe by Carl Sagan and Iosif Shklovsky back in 1974. This was a pretty early work on exobiology (or perhaps astrobiology), a field that still lacks data but provides an interesting framework for thinking about everything we know about ourselves. My guess is that unicellular life has probably evolved in many places but that the leaps to multicellular, intelligent, and technological stages are much rarer, though certainly possible (the current sample size is N=1 if you give humanity the benefit of the doubt as an IC).

Based on this simulation work, we are not likely to expand that sample size anytime soon. Here is Roger Guay’s final paragraph on Centauri Dreams:

Conclusions? The ACD simulation dramatically demonstrates that there is indeed a synchronicity problem that automatically arises when ICs attempt to detect one another. And for reasonable (based on Earth’s specifications) Drake equation parameter selections, detection potentials are shown to be typically hundreds of years apart. In other words, we can expect to search for a few hundred years before finding another IC in our section of the galaxy. When you consider Occam’s razor, is not this synchronicity problem the most logical resolution to the Fermi Paradox?

Of course this is hardly the last word on the subject of the Fermi Paradox. In fact, while reading the blog posts that triggered this one, I discovered a recently updated book that goes into much greater depth, If the Universe Is Teeming with Aliens … WHERE IS EVERYBODY?: Seventy-Five Solutions to the Fermi Paradox and the Problem of Extraterrestrial Life (2015) by Stephen Webb. I've read the Kindle sample and I may have to buy it despite my insane backlog of books already waiting to be read. Author Stephen Webb also has an interesting blog.

Wednesday, January 11, 2017

Obama Farewell

I watched President Obama’s farewell speech last night with my wife. It was an incredibly hopeful and moving speech, and the part near the end when he spoke to and about his family and his VP and friend Joe Biden was hard to watch without crying. The presidency is huge, an unimaginably high-pressure job in which you are pulled in a million different directions and challenged every day to keep thousands of balls in the air. Obama did it with grace and often made it look easy, which it was not. I truly believe he is one of the most decent human beings ever to hold this office, and that when he had to comfort the families of children murdered in Newtown or order military operations or drone strikes that took lives, often including innocent lives, the responsibility truly weighed on him. I don’t want to contrast him with what is following him in just over a week. There’s no comparison. But at least we had this great and decent man as our president for eight years.

For those of us who believe in facts, who believe in the Constitution, who believe in science, who believe that all people deserve a chance, who believe that America is a great country but not the most important one in the world – wow, he was our president. For those who hate him for whatever reason, whether it’s because they didn’t prosper while much of the country was getting better after the near-disasters of the Bush years, or just because they choose to believe that this country is somehow intended to be Christian and White, I feel sorry for them. They missed out on something great.

His speech suggested that we need to keep working for what is right even in the face of the evil that has wormed its way into Washington and will be in charge for at least four years (assuming we make it that long). He says he’s optimistic, and I want to believe him, I want to try, but it’s very hard, even for a natural rational optimist like me. I’m giving money. Can I do more than that? Can I be an activist? Can I stomach the noise of politics more than a few months every four years? I don’t know. I'm glad I volunteered for the small amount of campaign work and donated the money I did in 2008 and 2012 (and 2016), but now I can barely stand to watch MSNBC for more than a few minutes. My wife watches it a lot and she’s anxious about the coming time. I get that, wanting to be informed but scared to death of what you see happening. I'm fortunate that I am able to put it aside and focus on other things, sometimes anyway.

I don’t know what to do. Reach out to the other side? Try to cross that shaky bridge? I'm not sure I can, and that in itself is a bad sign. I need to think more about it once the dust has settled a bit. But I know I will miss having a president who is smart and who I can trust to lead our country for the benefit of its people. That is a huge and scary loss.
